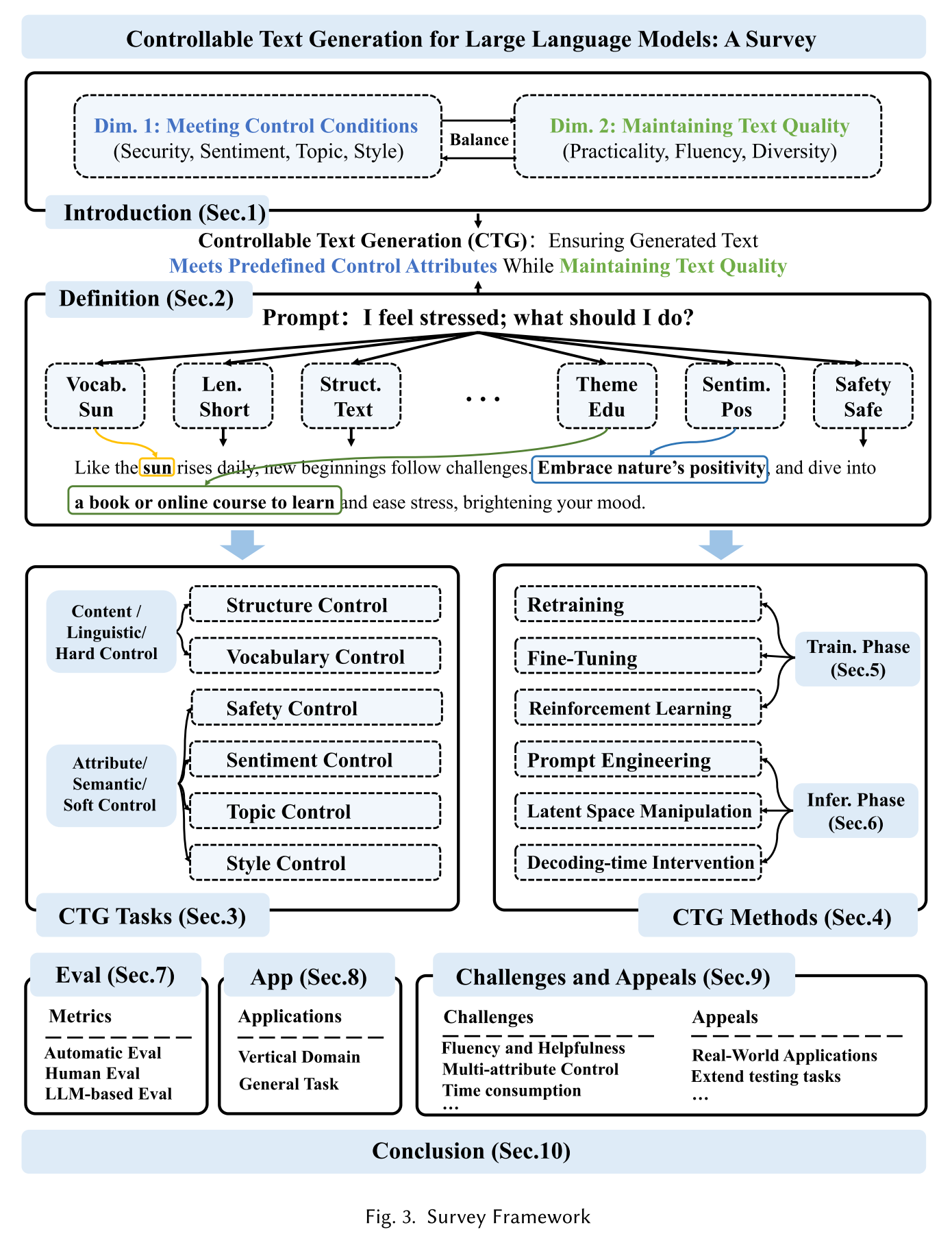

Controllable Text Generation for Large Language Models: A Survey

Liang, Xun, Hanyu Wang, Yezhaohui Wang, Shichao Song, Jiawei Yang, Simin Niu, Jie Hu et al. “Controllable text generation for large language models: A survey.” arXiv preprint arXiv:2408.12599 (2024).

Background and Motivation

In real-world applications, LLMs are expected to cater to specific user needs: what matters is not only the accuracy of the generated information but also the way it is expressed.

What is Controllable Text Generation (CTG)?

Techniques that ensure the generated output adheres to specific control conditions while maintaining high quality.

- Explicit control: direct the model with clearly defined instructions through human-computer interaction (e.g., prompt)

- Implicit control: ensure the generated text meets certain standards without explicitly stated requirements

Why is CTG important?

- CTG empowers LLMs to generate more personalized and context-sensitive content that meets varying user requirements.

- CTG helps adapt generation to different application scenarios and requirements, producing the most suitable outputs.

Demands of CTG in Two Dimensions:

- Dim. 1: Meeting Predefined Control Conditions

- Tailor the text to meet specific objectives or requirements, making it well-suited for the intended application.

- Control conditions: focusing on a particular topic, avoiding harmful content, emulating specific linguistic styles.

- Dim. 2: Maintaining Text Quality

- Fluency: smooth, logically coherent

- Helpfulness: provide real-world value, helping to solve specific problems or offering necessary information

- Diversity: avoid being repetitive or formulaic, reflect innovation and diversity

Core contributions and unique features of this survey:

- Focus on Transformer architecture

- Emphasis on LLMs

- Exploration of model expression and CTG quality

- Innovative task classification framework

- Systematic classification of CTG methods

Section 2: Definition

Fundamental Principles of Text Generation

Transformer-based LLMs generate text by determining the probability of each token given the preceding tokens.

This auto-regressive framework enables LLMs to generate diverse, coherent, and contextually relevant text, ensuring that each new token aligns logically with the context established by the preceding tokens.
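
Written out, this is the standard auto-regressive factorization. The symbols here are notation chosen for these notes: $X = (x_1, \dots, x_n)$ is the generated token sequence and $x_{<i}$ stands for the tokens before position $i$.

$$
P(X) = \prod_{i=1}^{n} p(x_i \mid x_{<i})
$$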

Controllable Text Generation (CTG)

Integrate control conditions

My thought: Why must

Primary challenge of CTG: seamlessly incorporating the control conditions
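
A common way to write the controlled case is to condition every step on the control conditions. Here $C$ is assumed to denote the set of control conditions and $X = (x_1, \dots, x_n)$ the generated sequence; this is a sketch of the usual formulation, not a quotation from the paper.

$$
P(X \mid C) = \prod_{i=1}^{n} p(x_i \mid x_{<i}, C)
$$

Compared with unconditioned generation, the only change is the extra $C$ in each factor, which is why the difficulty lies in how $C$ is injected rather than in the generation procedure itself.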

Semantic Space Representation of CTG

- The CTG problem can be framed within an ideal semantic space containing all potential semantic vectors the LLM could generate.

- The attributes of generated text can be effectively decoupled into distinct dimensions.

- The semantic vectors can be manipulated through a transformation function.

- The effectiveness of transformation is evaluated through an optimization objective.

Section 3: Task Taxonomy in CTG

Content Control (Linguistic Control / Hard Control)

- Structure Control:

- Specific Formats: Generating text that adheres to specific formatting requirements with their unique language and structural norms.

- Organizational Structure: Ensuring that the text has appropriate paragraph divisions, headings, and list arrangements to enhance clarity and readability.

- Length Control: Managing the overall length of the generated text to meet specific requirements, ensuring its suitability for the intended platform or purpose.

- Vocabulary Control:

- Keyword Inclusion: Ensuring that the generated text includes a predefined set of keywords, thereby meeting specific information needs and enhancing the relevance and specificity of the presented information.

- Prohibition of Specific Terms: Preventing the use of potentially harmful or inappropriate terms, thus maintaining the integrity and appropriateness of the content.
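
Content control is "hard" in the sense that it can be verified mechanically. As a minimal sketch of such a check (the function names `check_vocabulary` and `check_length` are hypothetical helpers written for these notes, not part of the survey), the following tests a generated text for keyword inclusion, prohibited terms, and a length limit:

```python
import re


def check_vocabulary(text, required_keywords, banned_terms):
    """Return (missing_keywords, found_banned_terms) for a generated text."""
    # Lowercased word tokens give case-insensitive, whole-word matching.
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    missing = [k for k in required_keywords if k.lower() not in words]
    found = [b for b in banned_terms if b.lower() in words]
    return missing, found


def check_length(text, max_words):
    """True if the text has at most max_words whitespace-separated words."""
    return len(text.split()) <= max_words


draft = "The new solar panel is cheap and efficient."
missing, found = check_vocabulary(draft, ["solar", "warranty"], ["cheap"])
print(missing, found, check_length(draft, 10))
```

A checker like this only detects violations after the fact; the methods in the following sections are about steering the model so that violations do not occur in the first place.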

Attribute Control (Semantic Control / Soft Control)

- Safety Control:

- Detoxification: The generated text should avoid any form of harmful content.

- Compliance with Laws and Regulations: The text must adhere to all applicable legal and regulatory requirements, e.g., privacy protection and copyright laws (cf. GDPR).

- Sentiment Control:

- Sentiment Orientation: Ensuring that the generated text exhibits a clear sentiment orientation to match specific communication purposes and hence aligns the emotional tone with the context or intended impact on the audience.

- Style Control:

- General Style Control: The generated text should meet the needs of specific occasions, industries and social environments.

- Personal Style Control: The generated text should mimic a specific individual's writing style, including their habits of expression and preferences.

- Topic Control:

- Thematic Consistency: The generated text should adhere to the specified theme, i.e. aligning with the expected knowledge and interests of the target audience.

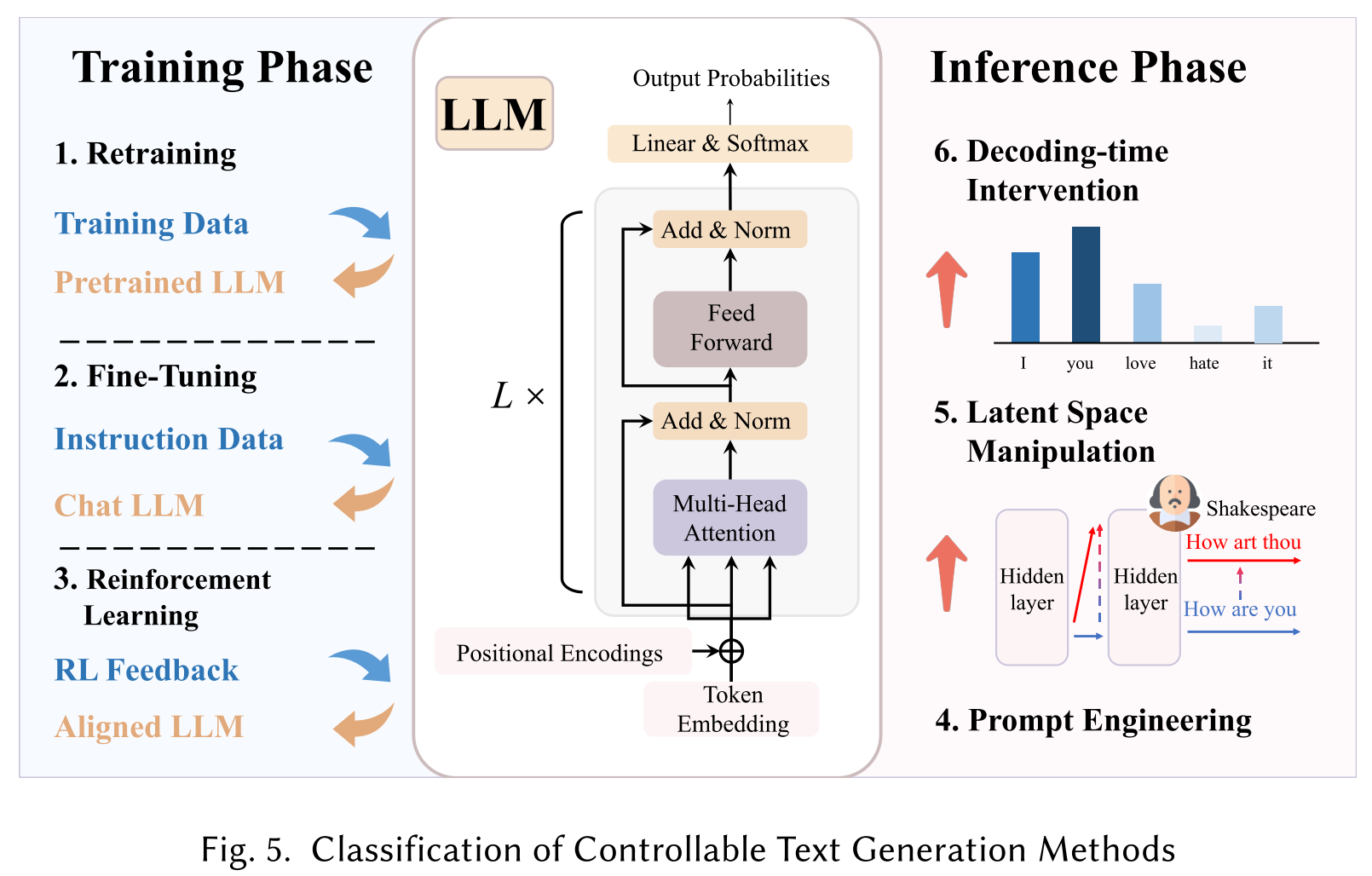

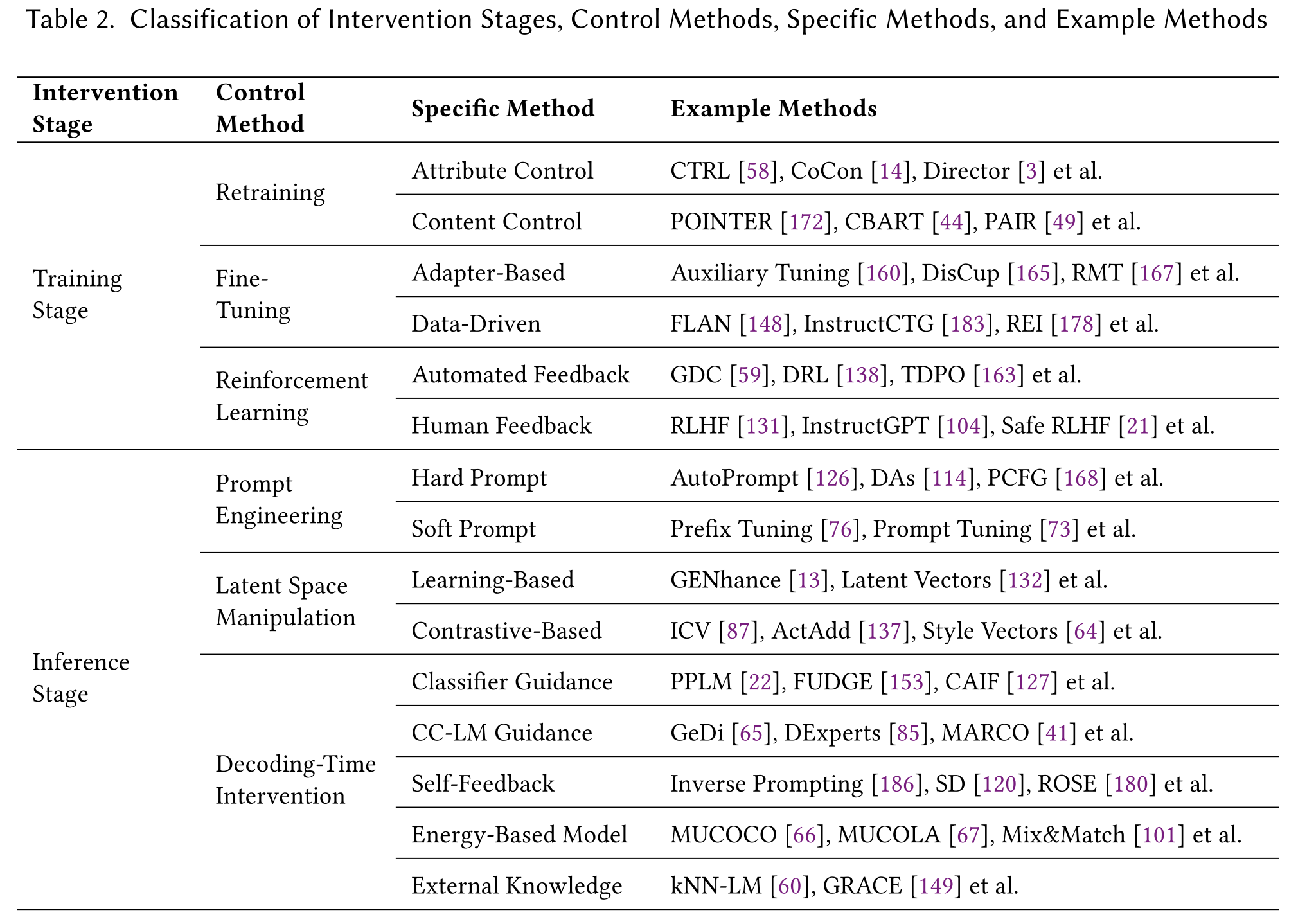

Section 4: Method Taxonomy of CTG

Training Stage Methods

- Retraining: Train models from scratch using datasets specifically designed to reflect the desired control conditions.

- Advantage: allows for adjustments in model architectures

- Fine-Tuning: Fine-tune pre-trained models.

- Advantage: requires relatively less data and computational resources than retraining

- Reinforcement Learning: Employ reward signals and iterative optimization to guide model outputs towards specific control objectives.

- Advantage: particularly well-suited for complex tasks

Inference Stage Methods

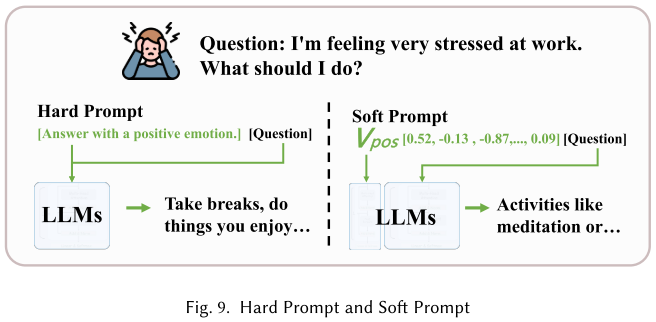

- Prompt Engineering: Manipulate input prompts, either in natural language (hard prompts) or continuous vector embeddings (soft prompts), to steer the generation process.

- Advantage: does not update model parameters, suitable for quickly adjusting generation strategies

- Latent Space Manipulation: Adjust activation states within the model’s hidden layers.

- Advantage: precise control without updating model parameters, especially effective for attribute control

- Decoding-time Intervention: Modify the probability distribution of the generated output, or apply specific rules during the decoding process to influence word selection. Use classifiers or reward models to evaluate generated segments and make real-time adjustments during decoding.

- Advantage: real-time adjustment during decoding, plug-and-play, flexibility for dynamic adjustments
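
To make decoding-time intervention concrete, here is a minimal sketch that edits next-token logits before sampling. The toy vocabulary, the `intervene` helper, and the `boost` value are all assumptions for illustration; real systems apply the same idea to the full vocabulary logits at every decoding step.

```python
import math


def softmax(logits):
    """Convert a {token: logit} dict into a probability distribution."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def intervene(logits, banned=(), boosted=(), boost=2.0):
    """Return adjusted logits: banned tokens get -inf, boosted tokens get +boost."""
    adjusted = dict(logits)
    for tok in banned:
        if tok in adjusted:
            adjusted[tok] = float("-inf")  # probability becomes exactly 0
    for tok in boosted:
        if tok in adjusted:
            adjusted[tok] += boost
    return adjusted


# Hypothetical next-token logits from a language model.
logits = {"great": 1.0, "awful": 1.5, "fine": 0.5}
probs = softmax(intervene(logits, banned=["awful"], boosted=["great"]))
best = max(probs, key=probs.get)
print(best, probs)
```

The model weights are never touched, which is what makes this family of methods plug-and-play. A classifier or reward model could replace the fixed `banned` and `boosted` lists by scoring candidate tokens at each step.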

Section 6: Inference Phase Methods

Prompt Engineering

- Guide the model in generating the desired text by providing clear instructions or examples.

- efficient few-shot learning in resource-limited scenarios

- hard prompts:

- discrete

- expressed in NL

- use natural language queries or statements to directly guide the model

- soft prompts:

- continuous

- trainable embeddings

- embed specific vectors in the model’s input space to guide its behavior

- Advantages:

- allows for adjustments during deployment without retraining the model

- does not require additional training data, resources, or extended inference time

Hard Prompt

- predefined trigger words or text prompts

- Advantages:

- straightforward and easy to understand

- require no additional fine-tuning

- Limitations:

- limited fine-grained control

- highly sensitive to word choice (even minor changes can significantly impact generation quality)

- Examples:

- AutoPrompt

- Dialogue Acts

- PCFG (Probabilistic Context-Free Grammar)
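
As a minimal sketch of a hard prompt, the control conditions can simply be spelled out in natural language and, optionally, preceded by few-shot examples. The `build_hard_prompt` function and its parameters are hypothetical, written only to illustrate the idea; they do not correspond to any of the methods listed above.

```python
def build_hard_prompt(task, topic=None, sentiment=None, max_words=None, examples=()):
    """Assemble a natural-language prompt that states the control conditions."""
    parts = []
    # Optional few-shot demonstrations come first.
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # Each control condition becomes an explicit phrase in the instruction.
    constraints = []
    if topic:
        constraints.append(f"about {topic}")
    if sentiment:
        constraints.append(f"with a {sentiment} tone")
    if max_words:
        constraints.append(f"in at most {max_words} words")
    instruction = task if not constraints else f"{task} " + ", ".join(constraints)
    parts.append(instruction + ".")
    return "\n\n".join(parts)


prompt = build_hard_prompt(
    "Write a product review",
    topic="headphones",
    sentiment="positive",
    max_words=50,
)
print(prompt)
```

Because the whole control signal lives in the wording, small changes to these phrases can change the output noticeably, which is the sensitivity limitation noted above.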

Soft Prompt

- continuous, trainable vector embeddings

- Advantages:

- more flexible and fine-grained control

- without updating the model parameters

- effective in handling complex attributes or multi-faceted control

- Disadvantages:

- limited interpretability

- require initial tuning

- Examples:

- Attribute Alignment

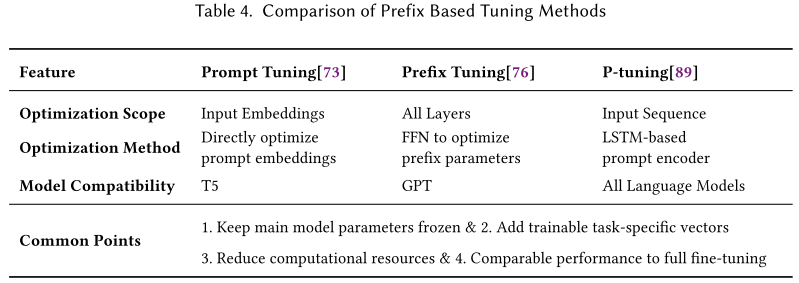

- Prefix-Tuning

- Prompt-Tuning

- P-Tuning

- Optimization methods for soft prompt control vectors:

- Contrastive Prefixes

- T5 Encoder-Decoder Soft Prompt Tuning

- Prompt-PPC (Plug-and-Play Controller with Prompting)

- PPP (Plug and Play with Prompts)

- Challenge in multi-attribute control tasks: attribute interference

- Control signals for different attributes may conflict, making it difficult for the generated text to satisfy all requirements simultaneously.

- Solutions:

- Discrete: estimates the attribute space through an autoencoder and iteratively searches for the intersection of attribute distributions.

- Tailor (Text-AttrIbute generaL contrOlleR): multi-attribute control method using pre-trained continuous attribute prompts.

- Prompt Gating: attach trainable gates between each prefix.
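
The core mechanism shared by the soft-prompt methods in this section is that a few trainable vectors are placed in front of the ordinary token embeddings. The sketch below shows only that mechanical step with plain Python lists; the helper names, the embedding dimension, and the initialization scale are assumptions, and the training loop that would update the soft prompt while the model stays frozen is omitted.

```python
import random


def init_soft_prompt(num_virtual_tokens, dim, seed=0):
    """Create small random vectors that act as trainable 'virtual tokens'."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)]
            for _ in range(num_virtual_tokens)]


def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Concatenate the soft prompt in front of the real token embeddings."""
    return soft_prompt + token_embeddings


# Hypothetical embeddings for a 2-token input with embedding dimension 4.
token_embeddings = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
soft_prompt = init_soft_prompt(num_virtual_tokens=3, dim=4)
model_input = prepend_soft_prompt(soft_prompt, token_embeddings)
print(len(model_input), len(model_input[0]))
```

In practice only the soft-prompt vectors would receive gradient updates. For multi-attribute control, one such prompt per attribute is typically combined, which is where the attribute interference described above comes from.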

- Title: Controllable Text Generation for Large Language Models: A Survey

- Author: Der Steppenwolf

- Created at: 2024-12-18 23:11:10

- Updated at: 2025-06-22 20:46:50

- Link: https://st143575.github.io/steppenwolf.github.io/2024/12/18/CTGSurvey/

- License: This work is licensed under CC BY-NC-SA 4.0.